Creating an Automated RPM Build Pipeline using GitHub Actions

There's something awesome about Linux packages. Being able to freely access repositories of pre-built software has always been a core part of the Linux universe.

Recently I've been building a lot of packages for eLX, and I've realized two things: packages can be relatively simple to put together, and the ability to archive and distribute your code is extremely powerful. Contrary to what you might think, you don't have to be a coder to reap the benefits of building & packaging software. What fascinated me was that, like other tools hijacked from the Linux development community, e.g. GNU make, there's massive value in these tools for system administrators as well.

First off – what is a package, anyways? We're talking about .rpm and .deb files. Those things we drink up from tools like dnf and apt in order to download software. These files are basically archives similar to .rar or .zip files: they're just compressed collections of other files, i.e. they're really nothing fancy.

In the world of distributions downstream from Fedora, we use .rpm files to package software, while projects downstream from Debian use .deb files. All .rpm files start as a .spec file, which defines how to turn some raw source code into a built binary application and package it into an .rpm. Just to get an idea of what I mean, here's an actual spec to build lnav, a command line log navigator written in C.

    Name: lnav
    Version: 0.11.1
    Release: 1%{?dist}
    Summary: Curses-based tool for viewing and analyzing log files
    License: BSD
    URL: http://lnav.org
    Source0: https://github.com/tstack/lnav/releases/download/v%{version}/%{name}-%{version}.tar.bz2

    BuildRequires: bzip2-devel
    BuildRequires: gcc-c++
    BuildRequires: libarchive-devel
    BuildRequires: libcurl-devel
    BuildRequires: make
    BuildRequires: ncurses-devel
    BuildRequires: openssh
    BuildRequires: openssl-devel
    BuildRequires: pcre2-devel
    BuildRequires: readline-devel
    BuildRequires: sqlite-devel
    BuildRequires: zlib-devel

    %description
    %{name} is an enhanced log file viewer.

    %prep
    %setup -q

    %build
    %configure --disable-static --disable-silent-rules
    %make_build

    %install
    %make_install

    %files
    %doc AUTHORS NEWS.md README.md
    %license LICENSE
    %{_bindir}/%{name}
    %{_mandir}/man1/%{name}.1*

Believe it or not, this file has everything needed to be fed into a program called rpmbuild and produce a complete .rpm. Now, getting into the nitty gritty of the spec file is beyond the scope of this article, but let's suppose you already have one. Wouldn't it be nice to automate the compiling of your code and the building of your packages with this spec file? The technology of compiling and packaging software is nowhere near new, but how can we marry the old world of packaging .rpm files with the new world of CI/CD?

The World of CI/CD Pipelines

In the past few years, CI/CD has become huge. Continuous Integration & Continuous Delivery refers to automating many of the tasks around code that used to be done by hand, and the practice has taken on a style of its own. CI/CD revolves around the idea of setting in motion a cascading sequence of events once a coder finishes and commits some piece of code to the code base.

One of the younger CI/CD pipeline tools is GitHub Actions. Recently I built a pipeline that would automatically package up the code I had just published into both an .rpm and .deb and publish it as a release asset in GitHub when tagged with a new version number. This is huge! Once the coding is committed, the entire process of building and publishing it in a consumable package form is automated.

The best news is that after front-loading the work by building the pipeline, it requires almost zero maintenance. So if you're interested in sharing code, scripts, or configurations, or in keeping snapshots of them to archive yourself, e.g. your dotfiles, you're about to have the tools.

The Build Pipeline

The pipeline walks through four "jobs", as they are called in most CI/CD frameworks. Each job completes a task that you define in its own fresh environment (on GitHub-hosted runners such as ubuntu-latest, a virtual machine) that is destroyed when the job has been completed. Here is a brief description of each job:

- First, it's going to download a copy of the most recent commit as raw source code. The pipeline only runs when there is a new version tagged on one of the commits, e.g. 1.4.0. This typically occurs when new features have been added or some sizable amount of work has been done and it's time for a new release. We're gonna download those plain text files and archive them in a file named something like softwarename-1.4.0.tar.gz.
- Next, we're going to take our .spec file which, remember, is like the recipe for how to build the software from source. We have the source code we downloaded in the last step, and now we've got this .spec file that has our instructions on how to build it. We're gonna run the recipe on the source code we archived in softwarename-1.4.0.tar.gz using a tool called rpmbuild, and it's going to make our package.
- Since half the Linux world speaks .rpm and the other half speaks .deb, we're going to use an awesome tool called alien to convert our .rpm to a .deb with minimal effort.
- Finally, the pipeline is going to take both of these packages, which it has cached in the process of stepping through the pipeline, and (1) create a new release in GitHub and (2) publish both our Linux packages of our freshly baked software onto GitHub so people can freely download it.
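Since everything hinges on the tag, it's worth knowing which tag names actually start the pipeline. The finished workflow filters pushed tags with the pattern `*.*.*`, so a tag needs at least two dots. Here's a rough sketch that approximates that filter with bash globbing (which is similar to, but not the same engine as, GitHub's filter patterns). The function name `tag_triggers_build` and the sample tags are made up for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeeds when a tag name would match the "*.*.*" filter.
# Assumption: bash globbing stands in for GitHub's filter pattern matching.
tag_triggers_build() {
    case "$1" in
        */*)   return 1 ;;  # in GitHub's filters, * does not match "/"
        *.*.*) return 0 ;;  # at least two literal dots
        *)     return 1 ;;
    esac
}

for tag in 1.4.0 v1.4.0 v1.4 release; do
    if tag_triggers_build "$tag"; then
        echo "$tag: would trigger the pipeline"
    else
        echo "$tag: ignored"
    fi
done

# github.ref_name is the pushed ref with the "refs/tags/" prefix removed.
ref="refs/tags/1.4.0"
ref_name="${ref#refs/tags/}"
echo "ref_name=$ref_name"
```

Keep in mind that the tag name is written into the spec's Version field as-is, so a tag like v1.4.0 would put the leading "v" into the package version too.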

Alright!

Walking Through the Jobs

GitHub Actions, like many other modern automation tools, is a YAML-based tool. However, rather than having a rigid API like some others, the entire system is itself somewhat "package" based. People on GitHub post modules that you can use referred to as "Actions". Using one of these published Actions usually just amounts to a few lines of YAML to carry out some task. To use a published "Action" you use the keyword uses in your workflow. For example:

    jobs:
      job1:
        steps:
          - name: Checkout repository
            uses: actions/checkout@v2

You'll see them throughout the pipeline.

Job #1:

    build_tarball:
      name: Build source archive
      runs-on: ubuntu-latest
      steps:
        - name: Checkout repository
          uses: actions/checkout@v2
        - name: Replace version in RPM spec
          run: sed -Ei 's/(^Version:[[:space:]]*).*/\1${{github.ref_name}}/' ${{ vars.PKG_NAME }}.spec
        - name: Create source archive
          run: tar -czvf ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz *
        - name: Upload source archive as artifact
          uses: actions/upload-artifact@v3
          with:
            name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz
            path: ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz

First, we use the actions/checkout@v2 "action" to pull down our code into the current execution environment, which, for whatever it's worth, is a fresh runner executing the steps of your pipeline.

Next, we go ahead and perform a quick search and replace using sed to set the version number in our spec file to the tag that triggered the pipeline in the first place. Then, we're going to archive the code by creating our tar file.
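If you'd like to try those two steps outside of a workflow first, here's a minimal sketch you can run locally. PKG_NAME and REF_NAME are hypothetical stand-ins for `${{ vars.PKG_NAME }}` and `${{ github.ref_name }}`, and the spec is a throwaway placeholder. It assumes GNU sed and GNU tar, as found on the ubuntu-latest runner.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical stand-ins for ${{ vars.PKG_NAME }} and ${{ github.ref_name }}.
PKG_NAME="softwarename"
REF_NAME="1.4.0"

# Work in a scratch directory so nothing real gets touched.
workdir="$(mktemp -d)"
cd "$workdir"

# A tiny placeholder spec carrying an outdated version.
printf 'Name:    %s\nVersion: 0.0.0\nRelease: 1\n' "$PKG_NAME" > "$PKG_NAME.spec"

# Keep "Version:" and its padding (group 1), replace whatever follows with the tag.
sed -Ei "s/(^Version:[[:space:]]*).*/\1${REF_NAME}/" "$PKG_NAME.spec"
version_line="$(grep '^Version:' "$PKG_NAME.spec")"
echo "$version_line"

# -c create, -z gzip-compress, -f archive name; * is everything in the directory.
archive="${PKG_NAME}-${REF_NAME}.tar.gz"
tar -czf "$archive" *

# List the archive to confirm what went in.
contents="$(tar -tzf "$archive")"
echo "$contents"
```

Note the -z flag: it is what makes the result an actual gzip-compressed archive rather than a plain tar file with a .tar.gz name.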

Finally, we're going to go ahead and upload that archive as what's called an artifact. This isn't the final upload to our GitHub Release page. Rather, it's a way of putting it aside while the rest of the pipeline runs. Because each job runs in a fresh environment, we need to make use of this artifact storage often in order to pass files from one job to the next.

Job #2:

Here's where the action is:

    build_rpm:
      name: Build .rpm package
      needs: build_tarball
      runs-on: ubuntu-latest
      steps:
        - name: Checkout repository
          uses: actions/checkout@v2
        - name: Replace version in RPM spec so correct source is downloaded when building RPM
          run: sed -Ei 's/(^Version:[[:space:]]*).*/\1${{github.ref_name}}/' ${{ vars.PKG_NAME }}.spec
        - name: Run rpmbuild on RPM spec to produce package
          id: rpm
          uses: naveenrajm7/rpmbuild@master
          with:
            spec_file: ${{ vars.PKG_NAME }}.spec
        - name: Upload .rpm package as artifact
          uses: actions/upload-artifact@v3
          with:
            name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm
            path: rpmbuild/RPMS/${{ env.ARCH }}/*.rpm

Because this new job starts in a fresh environment, we're going to start our second job off just like we did the first: by checking out our project with the actions/checkout@v2 action and updating the version in the .spec file.

Then we run the naveenrajm7/rpmbuild@master action, which basically runs two rpmbuild commands that produce the .rpm behind the scenes. Finally, we upload the .rpm as an artifact, just like we did with the raw source code in the first job, so we can have access to it in future jobs.
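For a feel of what building by hand looks like, here's a rough local sketch. This is not the action's actual script, just the usual rpmbuild routine as I understand it: set up the build tree, drop the tarball into SOURCES and the spec into SPECS, then build. The package name, version, and file names are hypothetical, and the build is skipped unless rpmbuild and both files are present.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical names; substitute your own package name and tag.
PKG_NAME="softwarename"
VERSION="1.4.0"

# Use a throwaway build root instead of the default ~/rpmbuild.
topdir="$(mktemp -d)/rpmbuild"
mkdir -p "$topdir"/{BUILD,RPMS,SOURCES,SPECS,SRPMS}

spec="$PKG_NAME.spec"
tarball="$PKG_NAME-$VERSION.tar.gz"

if command -v rpmbuild >/dev/null 2>&1 && [ -f "$spec" ] && [ -f "$tarball" ]; then
    cp "$tarball" "$topdir/SOURCES/"
    cp "$spec" "$topdir/SPECS/"
    # -ba builds both the binary package and the source package.
    rpmbuild --define "_topdir $topdir" -ba "$topdir/SPECS/$spec"
    # Built packages land under RPMS/<arch>/.
    find "$topdir/RPMS" -name '*.rpm'
else
    echo "rpmbuild, $spec, or $tarball not found; skipping the build"
fi
```

The RPMS/<arch>/ layout is why the upload step in the job points at rpmbuild/RPMS/${{ env.ARCH }}/*.rpm.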

Job #3:

    build_deb:
      name: Build .deb package
      needs: build_rpm
      runs-on: ubuntu-latest
      steps:
        - name: Download .rpm artifact
          uses: actions/download-artifact@v3
          id: download
          with:
            name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm
        - name: Convert .rpm to .deb
          run: |
            sudo apt install -y alien
            sudo alien -k --verbose --to-deb *.rpm
        - name: Upload .deb package as artifact
          uses: actions/upload-artifact@v3
          with:
            name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.deb
            path: ${{ vars.PKG_NAME }}*.deb

You're probably starting to see the pattern here. This time, instead of checking out our code, we're going to download one of the artifacts we uploaded in the earlier jobs. We're going to download the .rpm from the last job and convert it to a .deb for Debian-based systems. To do that, we'll run the alien command and upload the resultant .deb as an artifact with the others.

Job #4:

Finally, in the 4th job, we're going to create our release! A release is the GitHub object that these files are going to be attached to:

    release:
      name: Create release with all assets
      needs: [build_tarball, build_rpm, build_deb]
      runs-on: ubuntu-latest
      steps:
        - name: Download cached rpm, deb, and tar.gz artifacts
          uses: actions/download-artifact@v3
        - name: Release
          uses: softprops/action-gh-release@v1
          with:
            files: |
              ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz/*.tar.gz
              ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm/**/*.rpm
              ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.deb/**/*.deb

In this job, we're going to fetch all those "artifacts" we kept throwing up into the cache at the end of each job. Without any other arguments, the following step will download all items saved in the cache:

    - name: Download cached rpm, deb, and tar.gz artifacts
      uses: actions/download-artifact@v3

After that, what we've actually pulled down is:

- an archive of the raw code in the format softwarename-1.4.0.tar.gz
- an .rpm of the build, e.g. softwarename-1.4.0.rpm
- a .deb of the build, e.g. softwarename-1.4.0.deb
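One detail that explains the odd-looking paths in the `files:` list: when download-artifact fetches everything at once, each artifact lands in its own directory named after the artifact. Here's a small simulation of that layout using empty placeholder files. The file names inside each directory are guesses for illustration, and bash's globstar is only standing in for the glob matching the release action does.

```shell
#!/usr/bin/env bash
set -euo pipefail
shopt -s globstar nullglob   # let ** match zero or more directory levels

# Hypothetical values mirroring vars.PKG_NAME, github.ref_name, env.DIST, env.ARCH.
PKG_NAME="softwarename"; REF_NAME="1.4.0"; DIST="el7"; ARCH="noarch"

workspace="$(mktemp -d)"
cd "$workspace"

# Artifact names, exactly as the upload steps build them.
tar_artifact="$PKG_NAME-$REF_NAME.tar.gz"
rpm_artifact="$PKG_NAME-$REF_NAME-1.$DIST.$ARCH.rpm"
deb_artifact="$PKG_NAME-$REF_NAME-1.$DIST.$ARCH.deb"

# Simulated download: one directory per artifact, placeholder files inside.
mkdir -p "$tar_artifact" "$rpm_artifact" "$deb_artifact"
touch "$tar_artifact/$PKG_NAME-$REF_NAME.tar.gz"
touch "$rpm_artifact/$PKG_NAME-$REF_NAME-1.$DIST.$ARCH.rpm"
touch "$deb_artifact/${PKG_NAME}_${REF_NAME}-1_all.deb"

# Expand the same three patterns the release step passes to files:.
assets=( "$tar_artifact"/*.tar.gz "$rpm_artifact"/**/*.rpm "$deb_artifact"/**/*.deb )
printf '%s\n' "${assets[@]}"
```

So the first path segment in each pattern is the artifact's directory, and the glob after it picks up the file inside regardless of its exact name.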

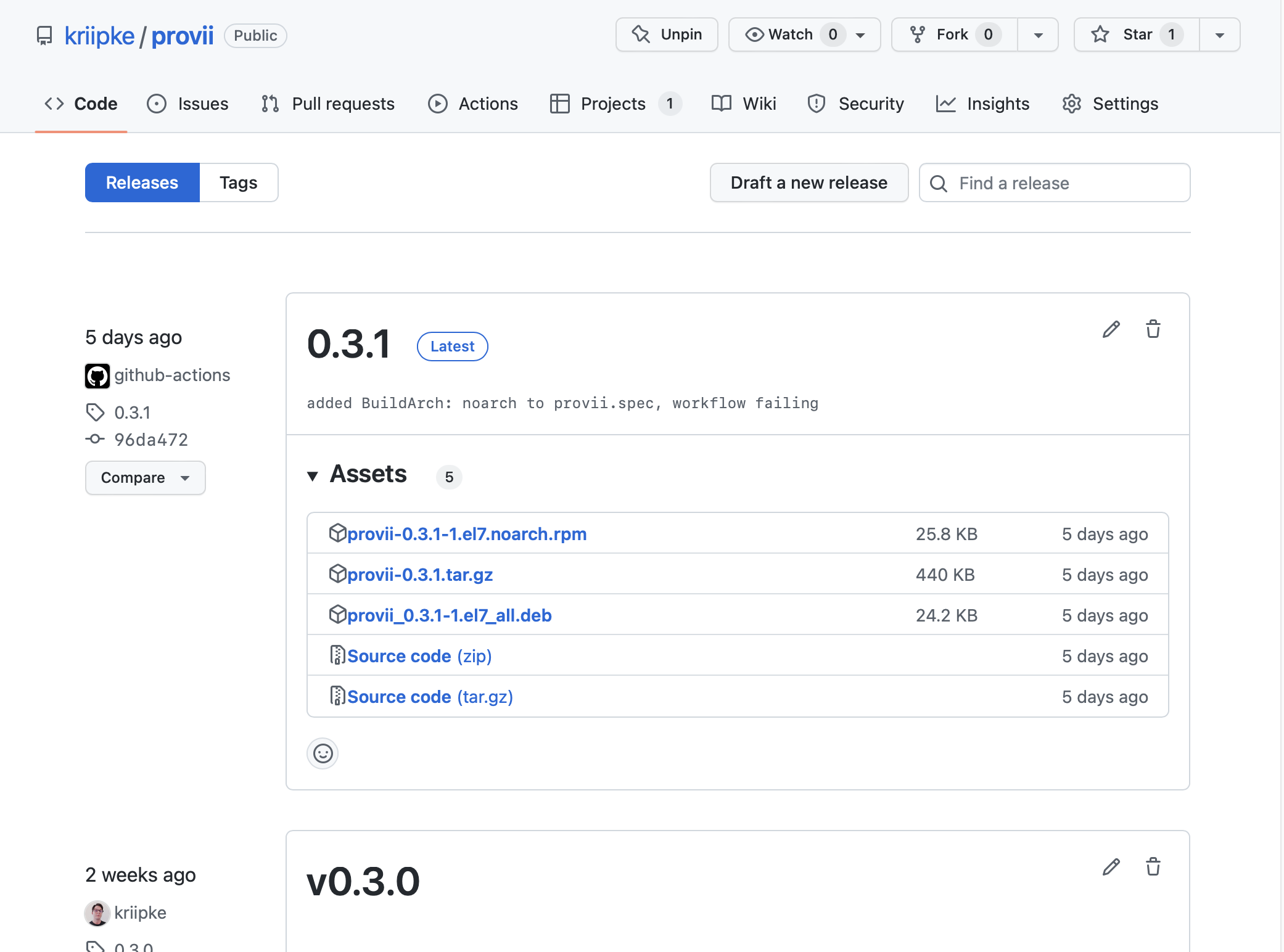

Lastly, we have quite a useful action here called softprops/action-gh-release@v1 that allows us to create a release and attach all our assets to it in the same step. In this step, we upload our artifacts, and voilà! Our code has been shared. It will now be visible on the "Releases" page of our repo:

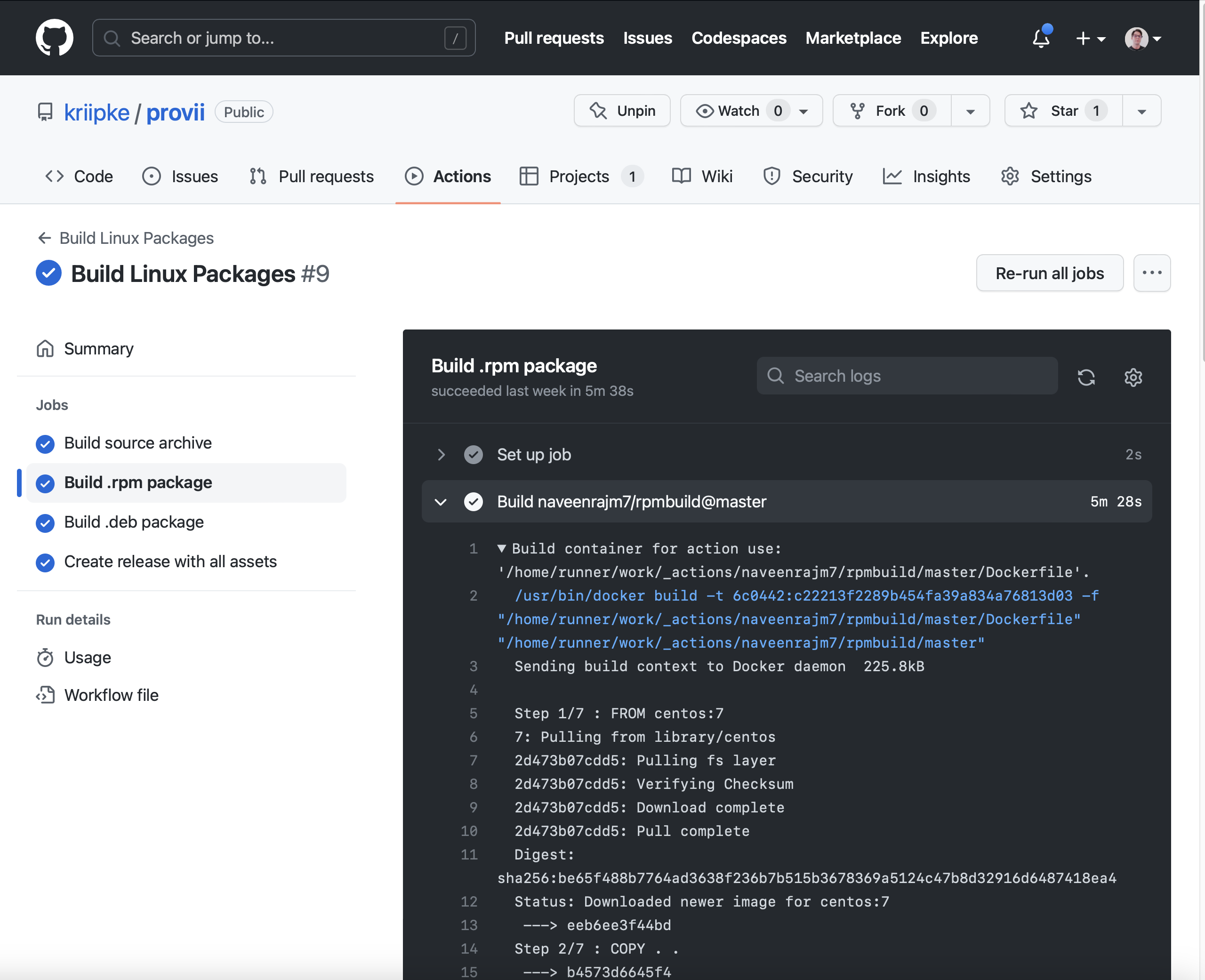

Keep in mind this process is totally automated. Once a version tag has been pushed, the pipeline starts running. You can keep tabs on it by navigating to the "Actions" tab at the top of your repository. There's a pretty comprehensive log for you to go through. It will look something like this:

Alright, here's the finished copy of the pipeline!

    name: Build Linux Packages

    on:
      push:
        tags:
          - "*.*.*"

    env:
      DIST: el7
      ARCH: noarch

    jobs:
      build_tarball:
        name: Build source archive
        runs-on: ubuntu-latest
        steps:
          - name: Checkout repository
            uses: actions/checkout@v2
          - name: Replace version in RPM spec so correct source is downloaded when building RPM
            run: sed -Ei 's/(^Version:[[:space:]]*).*/\1${{github.ref_name}}/' ${{ vars.PKG_NAME }}.spec
          - name: Create source archive
            run: tar -czvf ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz *
          - name: Upload source archive as artifact
            uses: actions/upload-artifact@v3
            with:
              name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz
              path: ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz

      build_rpm:
        name: Build .rpm package
        needs: build_tarball
        runs-on: ubuntu-latest
        steps:
          - name: Checkout repository
            uses: actions/checkout@v2
          - name: Replace version in RPM spec so correct source is downloaded when building RPM
            run: sed -Ei 's/(^Version:[[:space:]]*).*/\1${{github.ref_name}}/' ${{ vars.PKG_NAME }}.spec
          - name: Run rpmbuild on RPM spec to produce package
            id: rpm
            uses: naveenrajm7/rpmbuild@master
            with:
              spec_file: ${{ vars.PKG_NAME }}.spec
          - name: Upload .rpm package as artifact
            uses: actions/upload-artifact@v3
            with:
              name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm
              path: rpmbuild/RPMS/${{ env.ARCH }}/*.rpm

      build_deb:
        name: Build .deb package
        needs: build_rpm
        runs-on: ubuntu-latest
        steps:
          - name: Download .rpm artifact
            uses: actions/download-artifact@v3
            id: download
            with:
              name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm
          - name: Convert .rpm to .deb
            run: |
              sudo apt install -y alien
              sudo alien -k --verbose --to-deb *.rpm
          - name: Upload .deb package as artifact
            uses: actions/upload-artifact@v3
            with:
              name: ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.deb
              path: ${{ vars.PKG_NAME }}*.deb

      release:
        name: Create release with all assets
        needs: [build_tarball, build_rpm, build_deb]
        runs-on: ubuntu-latest
        steps:
          - name: Download cached rpm, deb, and tar.gz artifacts
            uses: actions/download-artifact@v3
          - name: Release
            uses: softprops/action-gh-release@v1
            with:
              files: |
                ${{ vars.PKG_NAME }}-${{ github.ref_name }}.tar.gz/*.tar.gz
                ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.rpm/**/*.rpm
                ${{ vars.PKG_NAME }}-${{ github.ref_name }}-1.${{ env.DIST }}.${{ env.ARCH }}.deb/**/*.deb

To add it to your project, just copy and paste the contents into a file in the .github/workflows folder in your repository. You can name the file anything you want, as long as it ends in .yml or .yaml; it will be executed regardless. The workflow reads the package name from a repository variable called PKG_NAME, so make sure that is defined in your repository's settings. For reference you can see how it's used in my project provii here:

Enjoy!